The evidence for the benefits of school choice grew considerably stronger this month with the release of a new, methodologically rigorous evaluation of the D.C. Opportunity Scholarship Program.

The D.C. Opportunity Scholarship Program is a school voucher program in Washington, D.C., set up by Congress, that provides students with scholarships to attend private schools of their choice.

Kaitlin Anderson and Patrick Wolf released “Evaluating School Vouchers: Evidence from a Within-Study Comparison” to compare the performance of quasi-experimental methods against the “benchmark” outcomes from a prior experimental evaluation of the D.C. Opportunity Scholarship Program that Wolf conducted from 2004 to 2009.

Anderson and Wolf re-examined the test score impacts found in the earlier random assignment evaluation. That earlier evaluation found that reading scores increased, but the increases were not statistically significant and could have been due to chance.

The analytic method Anderson and Wolf use in this new evaluation is different enough from Wolf’s initial experimental evaluation that it qualifies as a second D.C. school choice experimental study.

In this updated evaluation, a reliable instrumental variables (IV) approach enabled the authors to account for the fact that some students in the control group (those who applied but were not awarded a scholarship) actually did end up going to private school, just not through the D.C. scholarship program. The IV technique also enabled them to account for the inverse problem: scholarship awardees being counted in the treatment group even if they didn’t use the scholarship.

Unlike in the prior evaluation, they were able to leverage the IV technique to demonstrate that the D.C. Opportunity Scholarship Program had a statistically significant positive effect on the reading outcomes of participating students two, three, and four years after beginning participation in the program, with the strongest gains accruing in the fourth year.
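To make the IV adjustment described above concrete, here is a minimal sketch on simulated data. It is not the authors' code or data. It uses the simplest form of the technique (a Wald ratio with the lottery outcome as the instrument), which may differ from the specification in the paper. The effect size, take-up rates, score scale, and all function names are assumptions chosen purely for illustration.

```python
import random

TRUE_EFFECT = 5.0        # assumed score gain from attending private school
N_APPLICANTS = 200_000   # hypothetical sample size


def simulate_applicant(rng):
    """Return (won_lottery, attends_private, score) for one made-up applicant."""
    won_lottery = rng.random() < 0.5
    u = rng.random()
    if won_lottery:
        # Assumption: 25% of winners never use the scholarship.
        attends_private = u < 0.75
    else:
        # Assumption: 10% of lottery losers attend private school anyway.
        attends_private = u < 0.10
    score = rng.gauss(600, 20) + (TRUE_EFFECT if attends_private else 0.0)
    return won_lottery, attends_private, score


def mean(values):
    values = list(values)
    return sum(values) / len(values)


def wald_estimate(rows):
    """Intent-to-treat effect, first stage, and their ratio (the IV estimate)."""
    winners = [r for r in rows if r[0]]
    losers = [r for r in rows if not r[0]]
    # Effect of being offered a scholarship, regardless of use.
    itt = mean(r[2] for r in winners) - mean(r[2] for r in losers)
    # How much the offer changes actual private school attendance.
    first_stage = mean(r[1] for r in winners) - mean(r[1] for r in losers)
    return itt, first_stage, itt / first_stage


rng = random.Random(0)
rows = [simulate_applicant(rng) for _ in range(N_APPLICANTS)]
itt, first_stage, iv = wald_estimate(rows)
print(f"ITT: {itt:.2f}  first stage: {first_stage:.2f}  IV: {iv:.2f}")
```

In this toy setup the offer-based comparison is diluted by winners who never enroll and by losers who enroll anyway. Dividing by the difference in attendance rates rescales it to the effect of actually attending private school.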

Although reading effects were positive and significant, math effects remained null, consistent with previous findings.

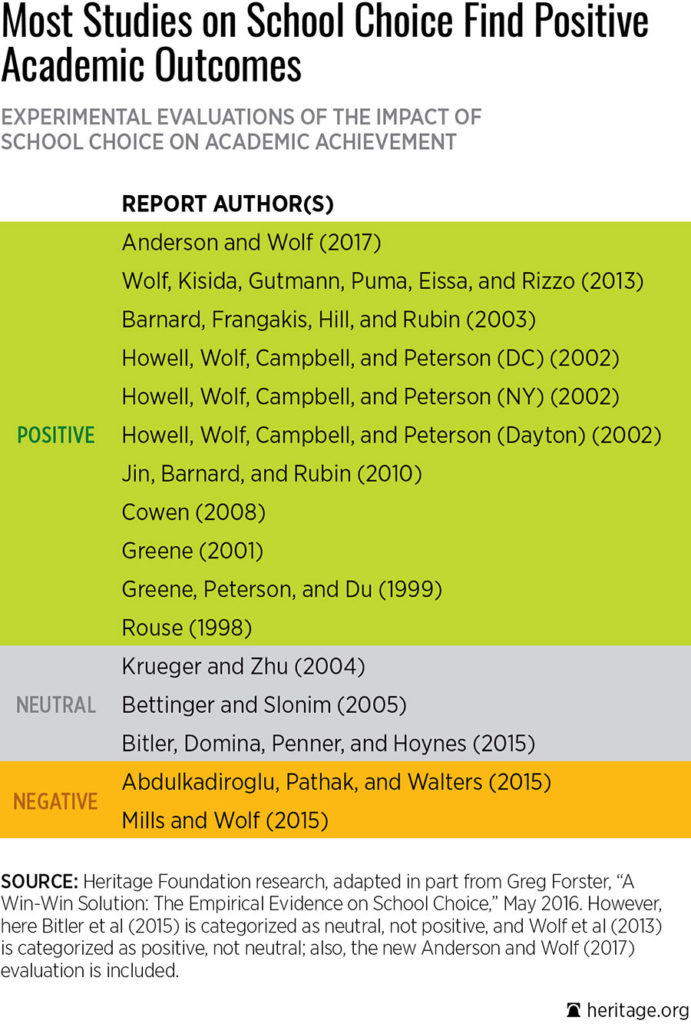

This study should now count as a new experimental evaluation of the effect of voucher use on student achievement, raising the tally of such evaluations from 15 to 16.

Moreover, this new evaluation is particularly important because, as the authors explain, “the logical explanation for that result is that students who participated in the [Opportunity Scholarship Program] were even more disadvantaged than their demographics suggested, thus completely contradicting the ‘cream-skimming’ claims of school choice opponents.”

For example, they note that students who apply for a voucher may be negatively selected on unobservable characteristics not captured in test scores, since parents may seek out school choice options when their children are struggling the most.

If true, this runs counter to the argument frequently made by school choice critics that choice programs “cream skim” the “best” students. The authors suggest that quasi-experimental methods that include both applicants and non-applicants likely undercount the positive impacts of choice as a result of this negative selection.

Conversely, quasi-experimental techniques may overestimate the impact of voucher use when the analysis is limited to applicants alone. In both cases, “the quasi-experimental estimates were wrong but in one case they were wrong low and in the other case they were wrong high.”

The ability to employ random assignment methods in education research is important, but rare. Random assignment requires oversubscription of a given program, which often then leads to a lottery.

A lottery—such as the one used when there are more applicants than available scholarships, as in the D.C. Opportunity Scholarship Program—allocates vouchers randomly to students who apply.

Researchers are then able to assess achievement and other outcomes of three sets of students: (1) those who applied for, received, and used the scholarship (the treatment group); (2) those who applied for and received the scholarship but didn’t necessarily use it (the voucher recipient group); and (3) those who applied for and did not receive a scholarship (the control group).

Notably, comparing the treatment and control groups accounts for motivation and selection bias, “as mere chance replaces parental motivation as the factor that determines whether a child gains access to school choice.”
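The logic of the lottery comparison can be sketched with a small simulation. This is an illustration under stated assumptions, not a reproduction of the study. It assumes an unobserved "struggling" trait that makes families more likely to apply and lowers scores, assumes every lottery winner uses the voucher, and uses a made-up effect size and score scale. All variable names are hypothetical.

```python
import random

TRUE_EFFECT = 5.0      # assumed score gain from using a voucher
N_FAMILIES = 100_000   # hypothetical population size


def mean(values):
    values = list(values)
    return sum(values) / len(values)


rng = random.Random(1)
winners, losers, non_applicants = [], [], []

for _ in range(N_FAMILIES):
    # Unobserved trait: higher means the child is struggling more.
    struggling = rng.gauss(0, 1)
    # Assumption: families of struggling children are more likely to apply.
    applies = struggling + rng.gauss(0, 1) > 0.5
    # Among applicants, a coin flip decides who gets the voucher.
    wins = applies and rng.random() < 0.5
    score = (600 - 10 * struggling
             + (TRUE_EFFECT if wins else 0.0)
             + rng.gauss(0, 15))
    record = (struggling, score)
    if not applies:
        non_applicants.append(record)
    elif wins:
        winners.append(record)
    else:
        losers.append(record)

# Naive comparison: voucher users versus families who never applied.
naive = mean(s for _, s in winners) - mean(s for _, s in non_applicants)
# Lottery comparison: winners versus losers among applicants only.
lottery = mean(s for _, s in winners) - mean(s for _, s in losers)
# Chance should balance the unobserved trait across winners and losers.
balance_gap = mean(t for t, _ in winners) - mean(t for t, _ in losers)

print(f"naive: {naive:.2f}  lottery: {lottery:.2f}  balance gap: {balance_gap:.3f}")
```

Under these assumptions the naive comparison understates the effect because voucher users start out more disadvantaged on the unobserved trait. The winner-versus-loser comparison does not have that problem, since both groups chose to apply and differ only by the luck of the draw.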

The causal impacts that can be inferred from experimental evaluations make them the gold standard of research, analogous to a double-blind trial in medical research. Such evaluations provide the “ideal counterfactual, representing what would have happened to the randomized treatment group (a.k.a. lottery winners) absent the intervention.”

This new, important evaluation by Anderson and Wolf adds to a growing body of experimental evidence pointing squarely in favor of choice producing benefits for participants.

There are now 16 experimental evaluations of the effect of school choice on academic achievement. Eleven find positive impacts, three find neutral or null effects, and two (both from uniquely prescriptive Louisiana) find negative effects.

The evidence overwhelmingly favors school choice. This new, rigorous evaluation should inform policy moving forward.